
Nando Metzger

I am a PhD student in the Photogrammetry and Remote Sensing Lab at ETH Zürich. My supervisors are Prof. Konrad Schindler and Prof. Devis Tuia. I like to work on computer vision problems with various applications such as monocular depth estimation, super-resolution, and remote sensing. I collaborated with the ICRC to map vulnerable populations in developing countries. Previously, I was a Student Researcher at Google working on 3D computer vision. Moreover, I interned and collaborated with Meta's Reality Labs Research. I obtained my bachelor's and master's degrees in Geomatics Engineering from ETH Zürich. During my master's, I specialized in deep learning, computer vision, and remote sensing.


Research

I'm interested in computer vision, deep learning, and their applications to remote sensing. Most of my work is related to super-resolution, depth estimation, or both simultaneously. Some papers are highlighted. * indicates equal contribution.

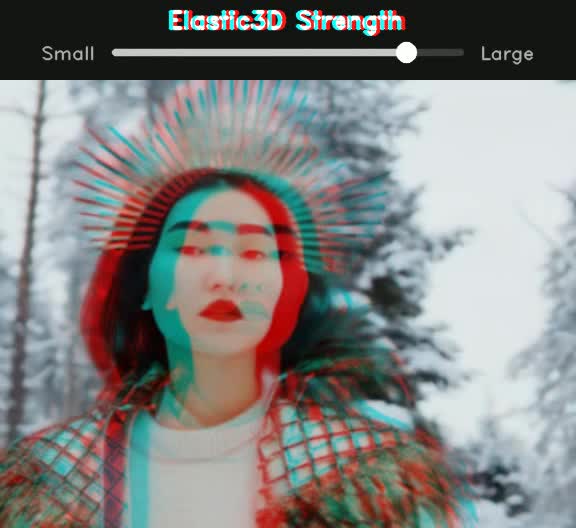

Elastic3D: Controllable Stereo Video Conversion with Guided Latent Decoding

Nando Metzger, Prune Truong, Goutam Bhat, Konrad Schindler, Federico Tombari

CVPR, 2026

project page / arXiv

Elastic3D is a controllable, end-to-end method for monocular-to-stereo video conversion. Based on latent diffusion with a novel guided VAE decoder, it ensures sharp and epipolar-consistent output while allowing intuitive control over the stereo effect at inference time.

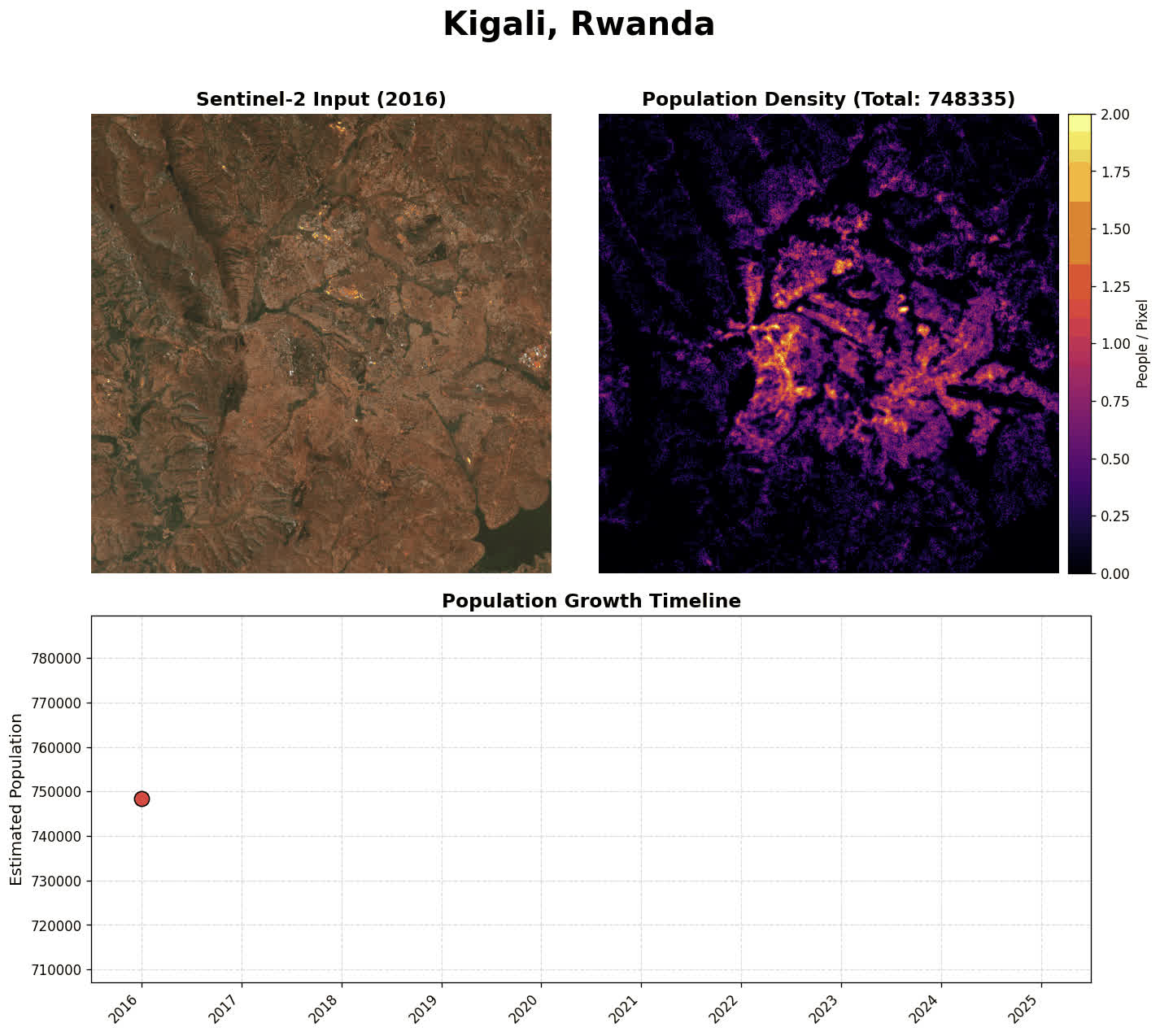

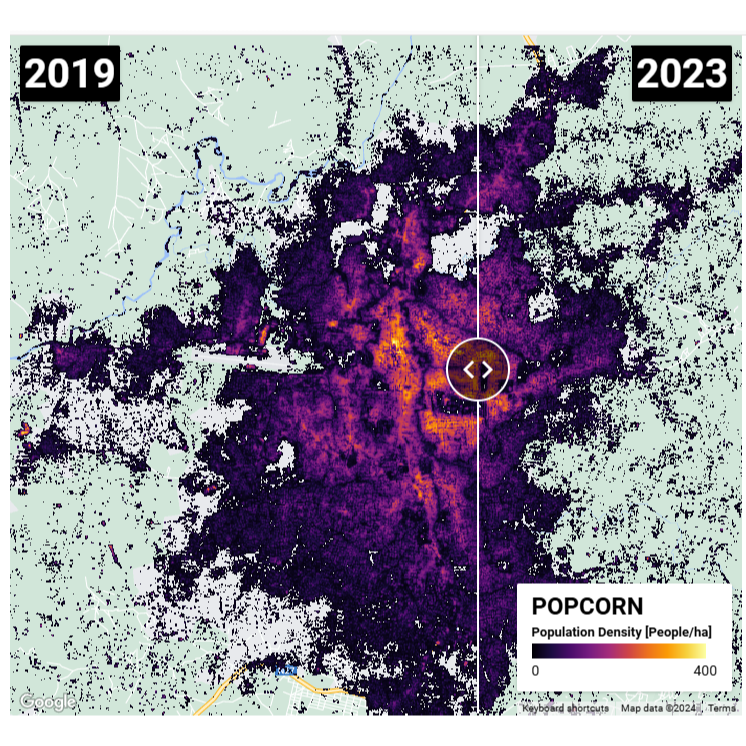

Bourbon 🥃: Distilled Population Maps

Nando Metzger

GitHub Project, 2026

code

Bourbon is a lightweight, distilled version of the POPCORN model for population estimation from Sentinel-2 satellite imagery alone. By distilling several Bag-Of-Popcorns maps into a compact backbone, Bourbon delivers fast, accurate inference suitable for large-scale mapping. We also provide an all-in-one version of the model that fetches imagery and performs inference in one command.

ML-Bokeh: Monocular View Synthesis with Cinematic Depth-of-Field

Nando Metzger

GitHub Project, 2025

code

ML-Bokeh extends the SHARP codebase with physically-based rendering and smart autofocus for cinematic depth-of-field effects. It features synthetic aperture simulation, artifact-free spiral sampling, and an automated autofocus system based on subject detection.

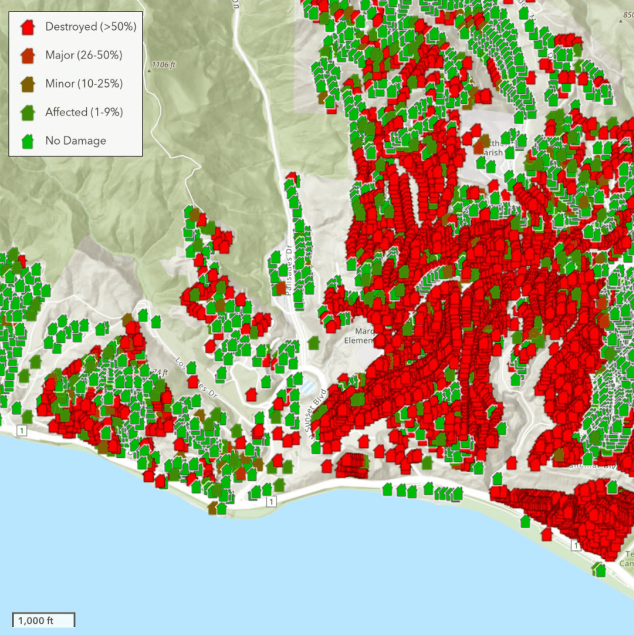

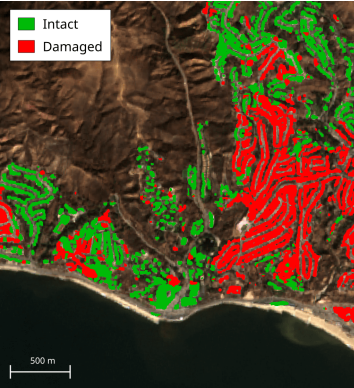

The Potential of Copernicus Satellites for Disaster Response: Retrieving Building Damage from Sentinel-1 and Sentinel-2

Olivier Dietrich, Merlin Alfredsson, Emilia Arens, Nando Metzger, Torben Peters, Linus Scheibenreif, Jan Dirk Wegner, Konrad Schindler

arXiv, 2025

arXiv

We investigate whether medium-resolution Copernicus Sentinel-1 and Sentinel-2 imagery can support rapid building damage assessment after disasters. We introduce the xBD-S12 dataset and show that, despite the 10 m resolution, building damage can be mapped reliably across many events, making Copernicus data a practical complement to limited very-high-resolution imagery.

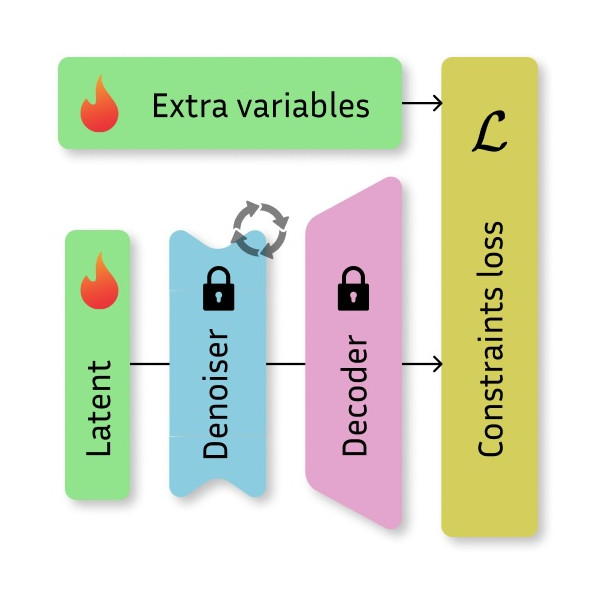

⇆ Marigold-DC: Zero-Shot Monocular Depth Completion with Guided Diffusion

Massimiliano Viola, Kevin Qu, Nando Metzger, Bingxin Ke, Alexander Becker, Konrad Schindler, Anton Obukhov

ICCV, 2025

project page / arXiv / code / demo

Marigold-DC is a zero-shot depth completion framework. We repurpose Marigold as an off-the-shelf monocular depth estimator and guide it with sparse depth observations.

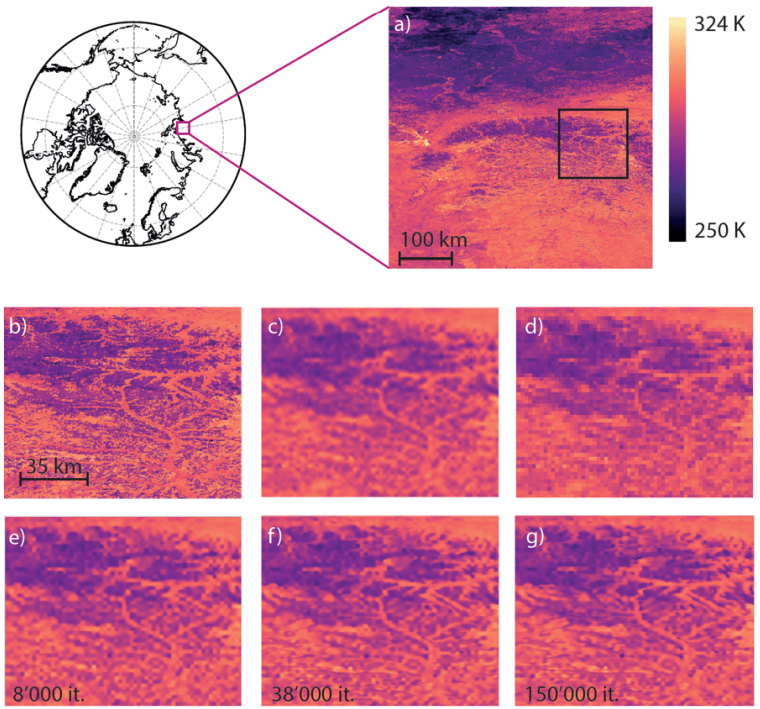

Four decades of circumpolar super-resolved satellite land surface temperature data

Sonia Dupuis, Nando Metzger, Konrad Schindler, Frank Göttsche, Stefan Wunderle

arXiv, 2025

arXiv

We present a 42-year pan-Arctic land surface temperature dataset, downscaled from AVHRR GAC to 1 km resolution with a deep anisotropic diffusion super-resolution model trained on MODIS LST and guided by high-resolution land cover, elevation, and vegetation height. The resulting twice-daily, 1 km LST record enables improved permafrost and near-surface air temperature modelling, Greenland Ice Sheet surface mass balance assessment, and climate monitoring in the pre-MODIS era.

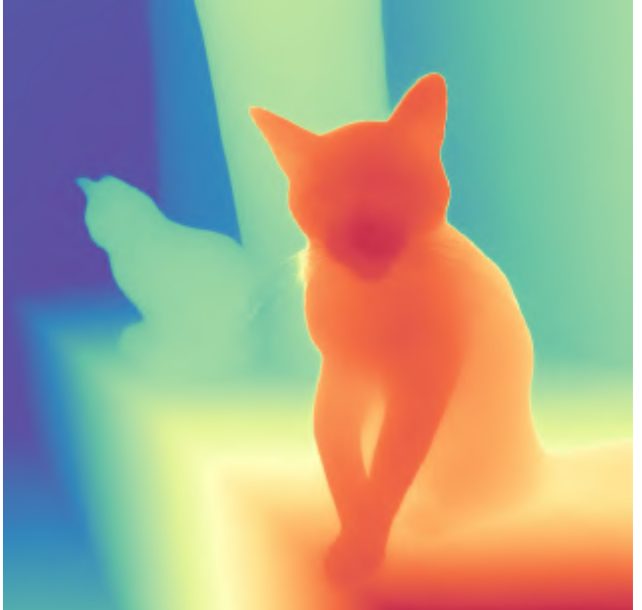

🌼Marigold: Affordable Adaptation of Diffusion-Based Image Generators for Image Analysis

Bingxin Ke*, Kevin Qu*, Tianfu Wang*, Nando Metzger*, Shengyu Huang, Bo Li, Anton Obukhov, Konrad Schindler

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2025

arXiv / code / demo

Marigold (TPAMI) generalizes the original CVPR'24 monocular depth estimator into a diffusion-based foundation model for dense prediction, supporting tasks such as depth, surface normals, and intrinsic image decomposition with only a few diffusion steps and efficient fine-tuning.

🌼Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation

Bingxin Ke, Anton Obukhov, Shengyu Huang, Nando Metzger, Rodrigo Caye Daudt, Konrad Schindler

CVPR, 2024 (Oral, Best Paper Candidate)

project page / arXiv / code / colab / demo

Marigold is an affine-invariant monocular depth estimation method based on Stable Diffusion, leveraging its rich prior knowledge for better generalization and achieving state-of-the-art performance with significant improvements, even with synthetic training data.

BetterDepth: Plug-and-Play Diffusion Refiner for Zero-Shot Monocular Depth Estimation

Xiang Zhang, Bingxin Ke, Hayko Riemenschneider, Nando Metzger, Anton Obukhov, Markus Gross, Konrad Schindler, Christopher Schroers

NeurIPS, 2024

Paper / arXiv / project

BetterDepth is a plug-and-play diffusion-based refiner that boosts the performance of any SOTA zero-shot monocular depth estimation method.

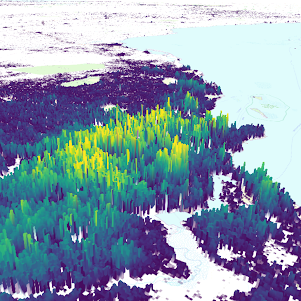

🍿POPCORN: High-resolution Population Maps Derived from Sentinel-1 and Sentinel-2🛰️

Nando Metzger, Rodrigo Caye Daudt, Devis Tuia, Konrad Schindler

Remote Sensing of Environment, 2024

project page / code / arXiv / demo / data

POPCORN is a lightweight population mapping method that uses free satellite images and minimal data, surpassing existing methods in accuracy and providing interpretable maps for data-scarce regions.

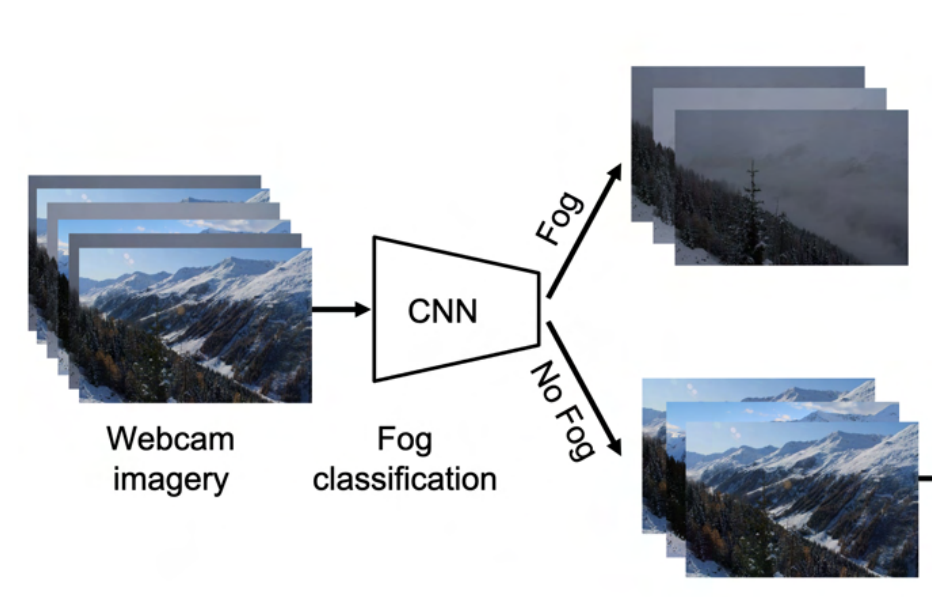

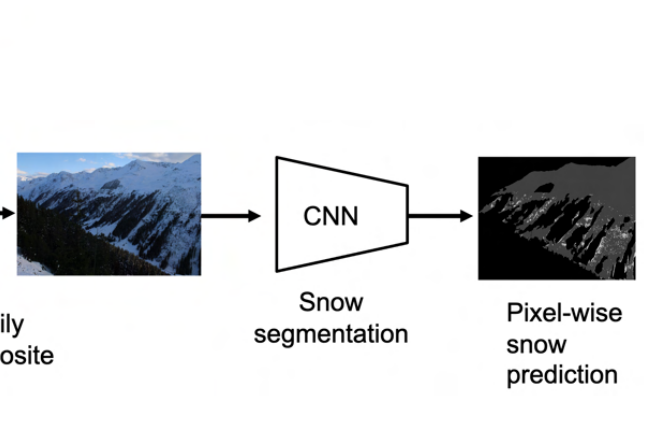

Automatic Image Compositing and Snow Segmentation for Alpine Snow Cover Monitoring

Janik Baumer, Nando Metzger, Elisabeth D Hafner, Rodrigo Caye Daudt, Jan Dirk Wegner, Konrad Schindler

IEEE Swiss Conference on Data Science (SDS), 2023

paper

We automate SLF's ground-based snow cover monitoring pipeline in the Dischma valley by combining deep learning-based fog classification with pixel-wise snow segmentation for ground camera imagery. Our approach removes manual thresholds, generalizes across multiple cameras, and enables more scalable, reliable alpine snow cover mapping to support avalanche research and satellite product validation.

🔥Thera: Aliasing-Free Arbitrary-Scale Super-Resolution with Neural Heat Fields

Alexander Becker, Rodrigo Caye Daudt, Dominik Narnhofer, Torben Peters, Nando Metzger, Jan Dirk Wegner, Konrad Schindler

Transactions on Machine Learning Research (TMLR), 2025

project page / arXiv / code

We introduce neural heat fields, a neural field formulation that inherently models a physically exact point spread function, enabling analytically correct anti-aliasing at any super-resolution scale without extra computation. Building on this, Thera achieves aliasing-free arbitrary-scale single image super-resolution, substantially outperforming previous methods while remaining parameter-efficient and supported by strong theoretical guarantees.

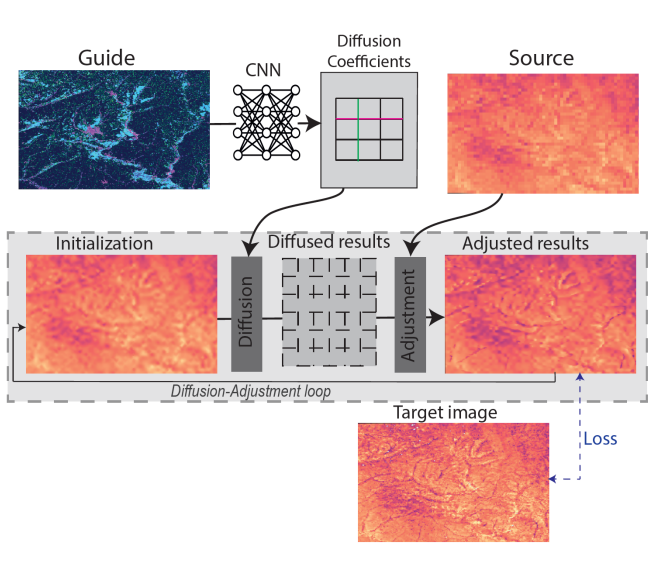

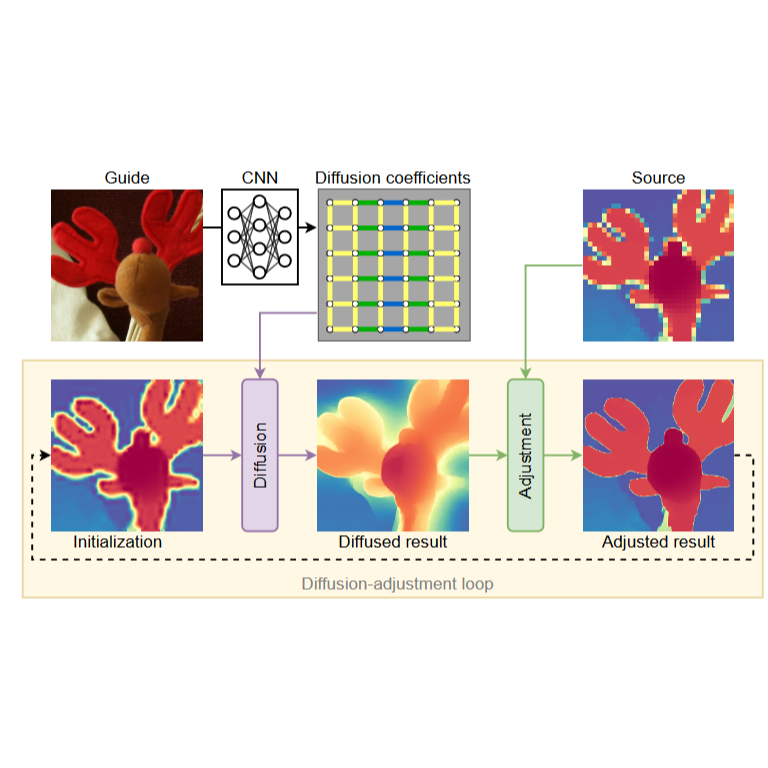

🦌DADA: Guided Depth Super-Resolution by Deep Anisotropic Diffusion

Nando Metzger*, Rodrigo Caye Daudt*, Konrad Schindler

CVPR, 2023

arXiv / paper / project page / video / poster

We propose DADA, a novel approach to depth image super-resolution that combines guided anisotropic diffusion with a deep convolutional network, enhancing both edge detail and contextual reasoning. This method achieves unprecedented results on three benchmarks, especially at larger scaling factors such as ×32.

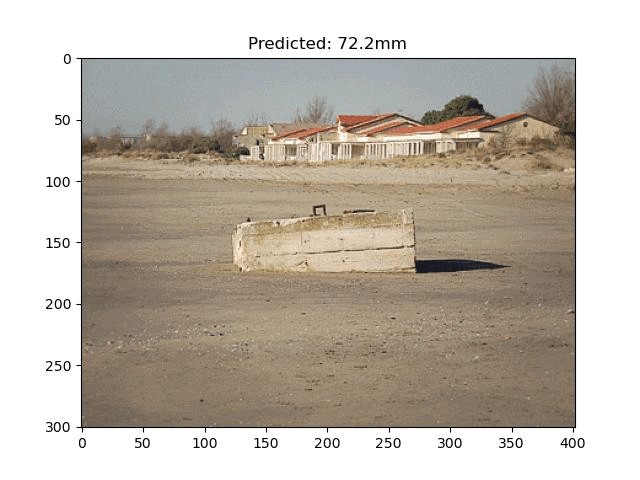

MLFocalLengths: Estimating the Focal Length of a Single Image

Nando Metzger

GitHub Project, 2023

code

Information about the focal length with which a photo was taken may be obstructed (internet photos) or unavailable (vintage photos). Inferring the focal length of a photo solely from a monocular view is an ill-posed task that requires knowledge about the scale of objects and their distance to the camera, i.e., scene understanding. I trained a deep learning model to acquire such scene understanding and predict the focal length, and I open-sourced the model in this repository.

🟡POMELO: Fine-grained Population Mapping from Coarse Census Counts and Open Geodata

Nando Metzger, John E Vargas-Muñoz, Rodrigo Caye Daudt, Benjamin Kellenberger, Thao Ton-That Whelan, Muhammad Imran, Ferda Ofli, Konrad Schindler, Devis Tuia

Nature - Scientific Reports, 2022

project page / video / arXiv / community dataset

POMELO is a deep learning model that creates fine-grained population maps using coarse census counts and open geodata, achieving high accuracy in sub-Saharan Africa and effectively estimating population numbers even without any census data.

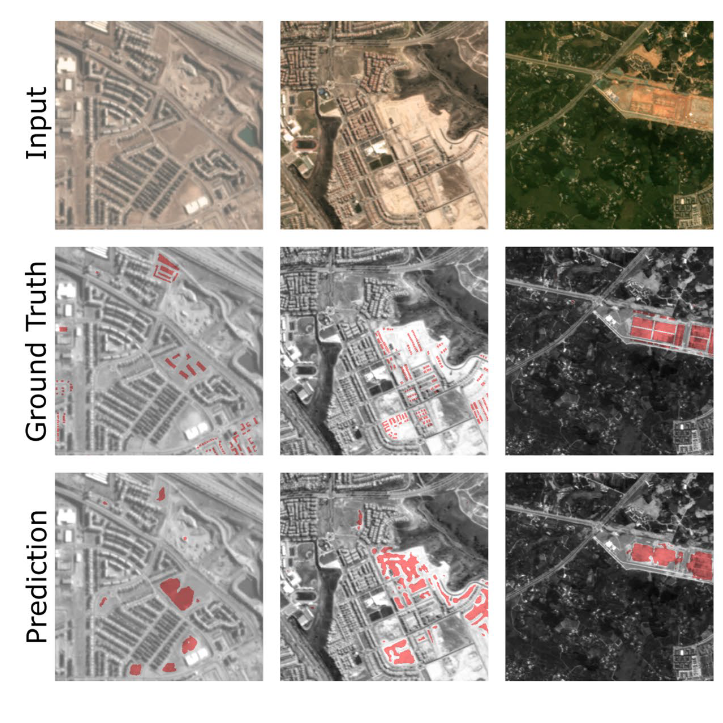

Urban Change Forecasting from Satellite Images

Nando Metzger, Mehmet Ozgur Turkoglu, Rodrigo Caye Daudt, Jan Dirk Wegner, Konrad Schindler

PFG, 2022 (Karl-Kraus Best Paper Award)

paper

We propose a method for forecasting the emergence and timing of new buildings using a deep neural network with a custom pretraining procedure, validated on the SpaceNet7 dataset.

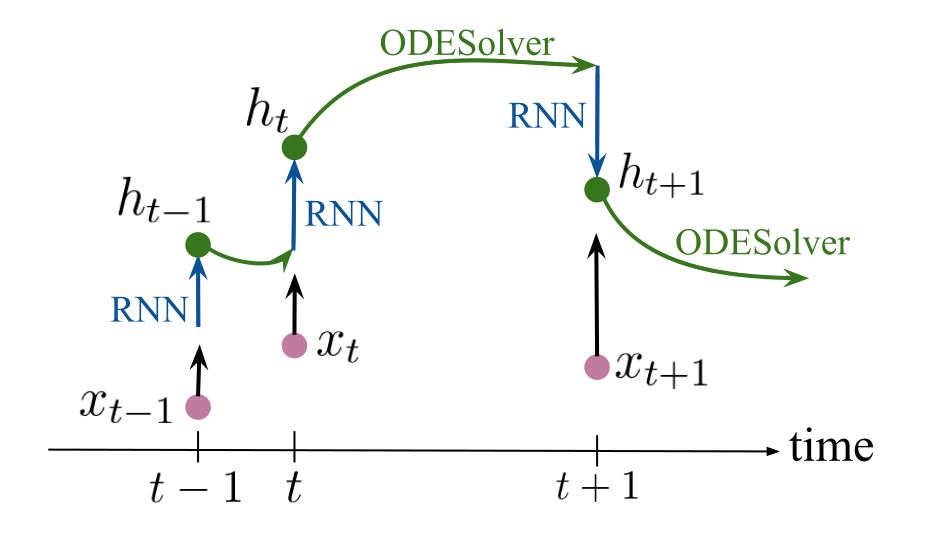

Crop Classification under Varying Cloud Cover with Neural Ordinary Differential Equations

Nando Metzger*, Mehmet Ozgur Turkoglu*, Stefano D'Aronco, Jan Dirk Wegner, Konrad Schindler

IEEE TGRS, 2021

paper

We propose combining neural ordinary differential equations (NODEs) with RNNs to improve crop classification from irregularly spaced satellite images. The approach is more accurate than common methods, especially with few observations, and its continuous representation of latent dynamics yields better early-season forecasting.

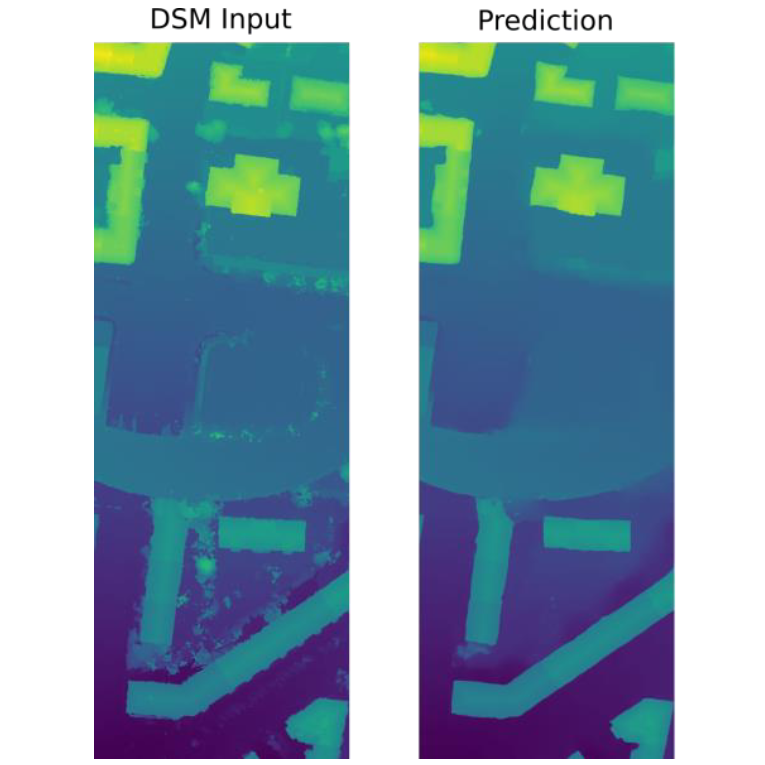

DSM Refinement with Deep Encoder-Decoder Networks

Nando Metzger, Corinne Stucker, Konrad Schindler

arXiv, 2020

paper

This work presents a method for automatically refining 3D city models generated from aerial images. A neural network, trained with reference data and a dedicated loss function, improves the DSMs by removing noise and artifacts while preserving geometric structures.

News

Thank you for the template, Jon Barron.